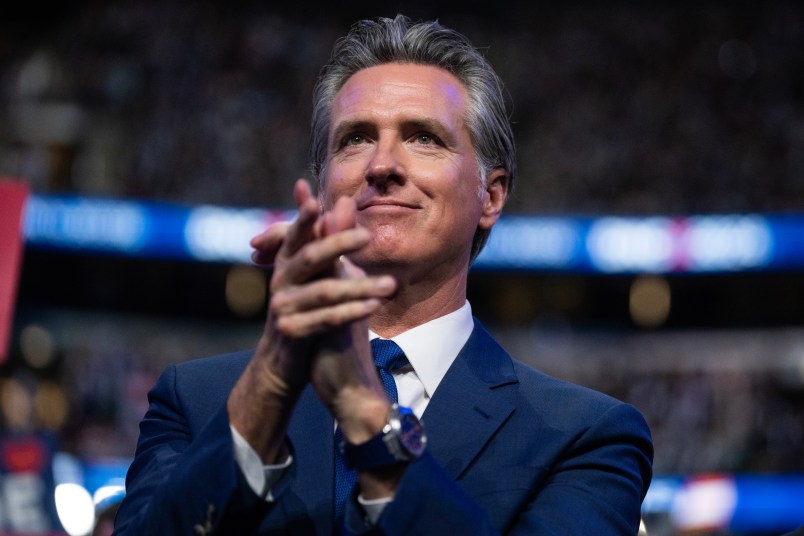

California Gov. Gavin Newsom (D) signed three artificial intelligence bills into law on Tuesday in an attempt to require social media companies to moderate the spread of disinformation and deepfakes (deceptive images, videos or audio clips resembling actual people) during elections.

The trio, which includes a first-of-its-kind law, largely aims to ban, remove or label AI-generated deepfakes and other intentionally misleading election-related content during specific periods.

While disinformation has played a growing role in American elections for decades, deepfake technology has in recent years advanced at a ferocious speed.

Deepfakes Ahead Of 2024 Elections

The 2024 cycle has seen an explosion of faked videos, prompting high-profile controversies.

In August, Donald Trump posted on Truth Social a deceptive, AI-generated image in which Taylor Swift appeared to endorse his candidacy — a move which backfired when the very popular singer instead endorsed Kamala Harris for president while warning of the dangers of deepfakes and misinformation.

In July, Elon Musk shared a faked Harris campaign video in which a manipulated version of Harris’ voice took digs at her gender, her race, her record as vice president and President Joe Biden. “This is amazing,” the tech CEO wrote with a laughing-crying emoji.

That episode prompted Newsom to preview the California legislation. “Manipulating a voice in an ‘ad’ like this one should be illegal,” he tweeted at the time. “I’ll be signing a bill in a matter of weeks to make sure it is.”

On Tuesday and Wednesday, Musk took to social media to mock the new California laws over several posts, suggesting they were violations of the First Amendment and would “make parody illegal.” The laws contain exemptions for parody, but one of the three requires parodies to feature a disclaimer in the run-up to an election.

The newly minted California laws come as lawmakers across the country have been contemplating how to regulate AI-generated political content to contain the spread of misinformation and disinformation aimed at confusing voters.

Over the last two years, Capitol Hill has shown sporadic interest in the ramifications of AI, specifically political deepfakes, and in how to regulate them without undermining free speech rights. Lawmakers on both sides of the aisle have introduced bills to try to address a complicated set of issues, without success. State legislatures have also sought to address the problem: over the past two years, 18 states have passed some form of law to limit deepfakes in elections, according to Public Citizen’s tracker.

The California Bills

The California bills are “game changers,” said Oren Etzioni, the founder and CEO of TrueMedia.org — a non-profit dedicated to fighting political deepfakes — and the founding director of the Allen Institute for AI. If successfully enacted, the laws will force social media companies to react by building restrictions into their platforms, he told TPM.

The first bill signed by Newsom, which takes effect immediately and aims to limit the circulation of deepfakes, prohibits people or groups “from knowingly distributing an advertisement or other election material containing deceptive AI-generated or manipulated content” within 120 days of a California election.

The second, set to go into effect in January, requires AI-generated audio, video or images in political advertisements to be labeled.

And the third, also set to go into effect in the new year, requires social media platforms and other websites with more than one million users in California to label or remove AI-generated deepfakes within 72 hours of a complaint. The bill also empowers “candidates, elected officials, elections officials, the Attorney General, and a district attorney or city attorney to seek injunctive relief against a large online platform for noncompliance with the act.”

“Safeguarding the integrity of elections is essential to democracy, and it’s critical that we ensure AI is not deployed to undermine the public’s trust through disinformation — especially in today’s fraught political climate,” Newsom said in a Tuesday statement detailing the three bills.

The laws will almost certainly face extensive challenges in court from social media companies and First Amendment rights groups. The creator of the video Musk shared filed a lawsuit in federal court on Wednesday.

Etzioni expressed concern about how California regulators will enforce the bills, and about the provisions that require social media companies to identify and act against deepfakes on their own.

“Self-regulation is a little bit like asking the fox to regulate himself outside the hen house,” Etzioni said. “Not likely to be effective, and has actually proven to not be effective by and large.”

But if California prevails, Etzioni said, the state, as home to many leading social media and AI companies, could offer a roadmap for regulators across the country — or at the federal level — who are hoping to successfully curb the use of deepfakes.

In fact, Newsom signed the bills the same week that a bipartisan group of lawmakers, including California’s U.S. Senate candidate Rep. Adam Schiff (D), introduced a House bill that would federally prohibit political campaigns and other political groups from using AI to create deepfakes to misrepresent their rivals or their views. That bill would also give the Federal Election Commission the power to regulate the use of AI in elections.
