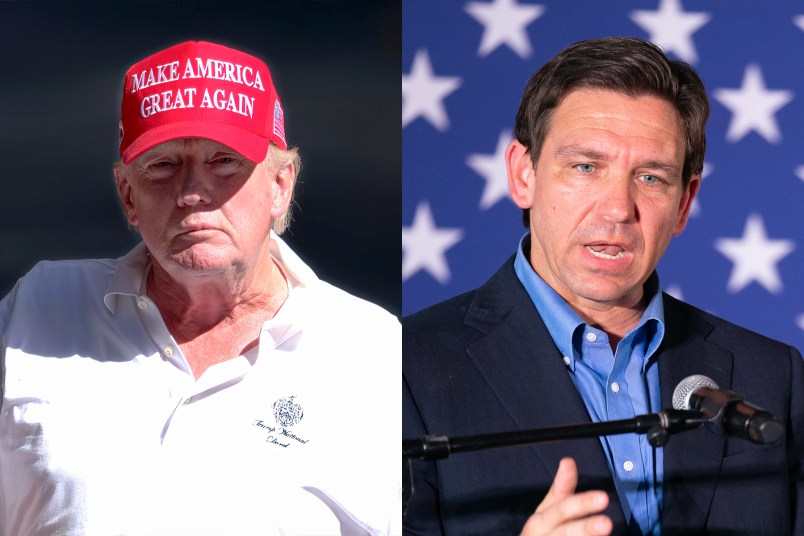

MAGA Republicans, who have been largely quiet about the dangers artificial intelligence technology poses to the upcoming elections, might be jumping on the anti-AI bandwagon, but only after Florida Gov. Ron DeSantis’ (R) presidential campaign this week posted what appears to be a partially AI-generated ad attacking former President Donald Trump.

The seemingly doctored images depict Trump hugging one of his favorite punching bags, Dr. Anthony Fauci.

Now some of Trump’s most loyal allies are sounding off on Twitter and warning against the use of AI-generated content in political ads.

“Smearing Donald Trump with fake AI images is completely unacceptable,” Sen. J.D. Vance (R-OH), who has endorsed Trump, tweeted on Thursday. “I’m not sharing them, but we’re in a new era. Be even more skeptical of what you see on the internet.”

“Those fake AI campaign ads need to be taken down immediately,” Rep. Marjorie Taylor Greene (R-GA) echoed.

At least one right-wing Senate Republican has already been vocal about the risks of AI. Sen. Josh Hawley (R-MO) is unveiling a framework for AI legislation, but it focuses on corporate guardrails: imposing fines when AI models collect sensitive personal data without consent, restricting companies from making AI technology available to children or advertising it to them, and creating a legal avenue for individuals to sue companies over harm caused by AI models, Axios reported this week. Hawley’s framework does not, however, address the technology’s potential to spread misinformation around the upcoming elections.

The video — shared on the DeSantis War Room, the account the campaign uses to rile up MAGA fans on Twitter — is not the first political ad to include AI-generated content so far this cycle.

In April, shortly after President Joe Biden announced his 2024 reelection bid, the Republican National Committee (RNC) released an AI-generated ad imagining a dystopian future in which Biden has won a second term.

The ad served up the GOP’s usual dose of fearmongering, but this time it was backed by an extremely realistic montage of AI-created images: China dropping bombs on Taiwan. Wall Street buildings boarded up amid a free fall in financial markets. Thousands of migrants flooding across the southern border unchecked. And police in tactical gear lining the streets of San Francisco to combat a fentanyl-fueled crime wave.

The RNC ad did come with a small disclaimer that read “Built entirely by AI imagery,” but experts said it still offered an alarming glimpse into how the technology could be used to spread misinformation in the upcoming election cycle. Experts say the DeSantis video kicks those concerns up a notch.

“The [DeSantis] video is particularly pernicious because it mixes real and fake images to enhance its credibility and its message,” Oren Etzioni, the founding CEO of the Allen Institute for AI, told TPM.

He added he was “pleased to see that Twitter offers a note of ‘context’” below the video, explaining that the video contains real imagery alongside AI-generated imagery.

“However,” Etzioni continued, “it’s clear that the worst is yet to come” when it comes to AI-generated content being weaponized in the upcoming elections.

The harsh criticism the DeSantis ad drew from Trump allies gives Democratic lawmakers like Rep. Yvette Clarke (D-NY), who have been sounding the alarm about how the rapidly developing technology can be used to spread misinformation in the upcoming election cycle, a new opportunity to press the issue.

“It’s clear the upcoming 2024 election cycle is going to be rife with AI-generated content and this ad points to the fact that this is a bipartisan issue,” Clarke told TPM in reaction to the DeSantis video. “Congress needs to act before this gets out of hand.”

In May, Clarke and some Senate Democrats — Sens. Michael Bennet (D-CO), Cory Booker (D-NJ) and Amy Klobuchar (D-MN) — introduced legislation to push for more oversight and transparency around the emerging technology, especially when it comes to campaign ads.

“The explosion of AI-generated content is outpacing the rules we currently have in place. This is exactly why I introduced the REAL Political Ads Act with Senators Klobuchar and Booker to update the guidelines governing political ads for the AI-era,” Bennet told TPM. “Congress should pass this bill right away — our democracy might well depend on it.”

There is currently no federal requirement to include a disclaimer in campaign ads when AI is used to create images, but their bill would expand the current disclosure requirements to mandate that AI-generated content in political ads be identified as such.

Clarke previously told TPM that she is particularly concerned about the spread of misinformation around the 2024 elections, coupled with the fact that a growing number of people can deploy the powerful technology rapidly and with minimal cost.
