The high-tech animation in movie theaters these days might look effortless, but the realism that modern animation achieves comes from attention to details such as the slight visual blur created by moving objects.

Historically, creating those blurs with computer programs has been computationally intensive because of the number of factors and the volume of data that computers must process to render them.

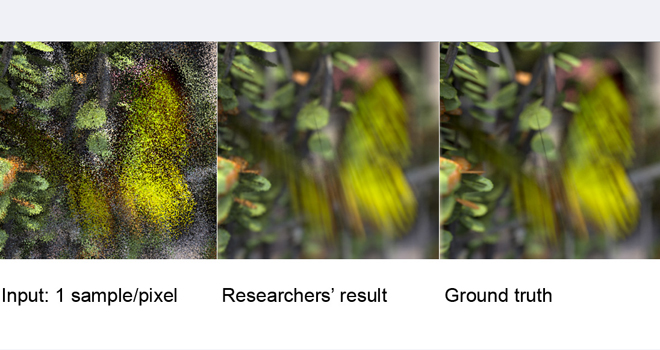

Now, a group of researchers at MIT and Nvidia, a computer graphics hardware firm, has figured out how to cut the processing power and time needed to realistically render motion blur in animations, MIT said late last week.

The papers describing the researchers’ work, to be presented at a computer graphics conference this August, promise to cut the time needed to render such graphics from hours to a matter of minutes, MIT said.

The researchers simplified the calculations of how rays of light from an imagined source would hit an object, and they reduced the number of points on a moving object that are analyzed for color from 100 to around 16.
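To see why the number of sample points matters, consider a generic way of producing motion blur: evaluate a pixel at many moments while the virtual shutter is open and average the results. The Python sketch below illustrates only that general idea and is not the researchers' algorithm; the scene (a disk sliding horizontally) and the names `disk_coverage`, `blurred_pixel` and `render_row` are hypothetical, chosen for illustration.

```python
def disk_coverage(px, py, cx, cy, radius):
    """Return 1.0 if the pixel centre lies inside the disk, else 0.0."""
    return 1.0 if (px - cx) ** 2 + (py - cy) ** 2 <= radius ** 2 else 0.0


def blurred_pixel(px, py, num_samples,
                  start_x=2.0, end_x=10.0, cy=4.0, radius=2.0):
    """Average the disk's coverage of one pixel over the shutter interval.

    Each sample evaluates the (made-up) scene at a different moment, so
    the work per pixel grows linearly with num_samples.
    """
    total = 0.0
    for i in range(num_samples):
        t = (i + 0.5) / num_samples            # evenly spaced times in (0, 1)
        cx = start_x + t * (end_x - start_x)   # disk centre at time t
        total += disk_coverage(px, py, cx, cy, radius)
    return total / num_samples


def render_row(y, width, num_samples):
    """Render one row of pixels, sampling at each pixel centre."""
    return [blurred_pixel(x + 0.5, y, num_samples) for x in range(width)]


# Compare a 100-sample render with a 16-sample render of the same row.
row_100 = render_row(4.0, 12, 100)
row_16 = render_row(4.0, 12, 16)
max_diff = max(abs(a - b) for a, b in zip(row_100, row_16))
print("largest per-pixel difference:", max_diff)
```

In this toy scene, 16 samples per pixel do roughly one-sixth of the work of 100. Real renderers face far more complex scenes, where naively cutting samples tends to add visible noise, which is why getting good results from fewer samples is notable.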

The researchers who worked on the two papers are MIT graduate student Jonathan Ragan-Kelley; Associate Professor Fredo Durand, who leads MIT’s Computer Graphics Group; Jaakko Lehtinen, a senior research scientist at Nvidia; graduate student Jiawen Chen; and Michael Doggett of Lund University in Sweden.