We may not have captured this completely in our coverage, but the brouhaha over the political science research project gone so wrong in Montana (and now in California) has been a very big deal in political science circles since the news broke late last week. I know some readers will be amused that anything could be buzzy in the cloistered poli sci world. But this has really captured the attention of everyone in the profession, and reignited some long-running debates about field research, the proper ethical lines to draw during research, and a host of related issues.

We’ve mostly avoided going too far into that part of the story because, let’s face it, poli sci research dos and don’ts are pretty damn weedy and matter only to those who do it for a living. But TPM Reader Dan Carpenter, a professor of government at Harvard, wrote in with his insights on the mess, and I think it gives you a good sense of how big a deal some of these issues are within the academy and why this episode has received so much attention. And it’s just plain thoughtful and interesting. Here’s Carpenter:

My colleagues and I at Harvard are discussing this and thinking about how to discuss the issue with our graduate students. I teach the intro to political science course here and we’ll be talking about it for weeks if not years to come. I do think that Montana and California state officials need to chill out a bit. The universities are cooperating and I doubt very much that the academics here meant to “interfere” in any way maliciously with Montana’s election system.

That said, I wanted to raise a point that I see missing in a lot of this week’s discussions: that it’s one thing to experiment with voters, quite another to experiment with candidates, too. (See point 4 below.) This is not to say that what the Stanford-Dartmouth crew did is necessarily wrong or unethical. We still lack a lot of information there and we need a calm debate that avoids becoming a witch hunt. It is to say that it differs a bit from the usual turnout experiments in a particular way.

On the study itself, I’m still digesting things, but I see four issues here, in decreasing order of clarity:

(1) The unauthorized use of the Montana state seal in mailers. Unless I’m missing something, this just wasn’t smart.

(2) The miniature disclosure (rather implicit) that people were part of a study. I can see that the Hawthorne effect is there to worry about, but the state of the art is to make this very clear, and sometimes to include it as a treatment condition.

(3) The question of whether Stanford should have also approved the experiment. (This is often an issue with multi-center clinical trials.) Dartmouth appears to have approved it, and now the Stanford spokesperson and their official letter say the University would not have approved it. (This as of Wednesday morning; the facts here could change.) This raises the question of appropriate venue-shopping and is another point on which to instruct our graduate students. If their name is going to be associated with an experiment, the Harvard IRB should probably be in on the approval even if the experiment has already been approved by another school.

(4) The fact that the candidates were unwittingly part of, and affected by, the treatment. This is where I disagree with Chris Blattman and John Patty, at least for the moment. Blattman is right that we all “intervene” in the lives of our subjects (so too with observational research). But I think part of the discomfort here comes less from the fact that we’re intervening in the lives of voters and more from the fact that we’re intervening in the lives (or prospects) of the candidates.

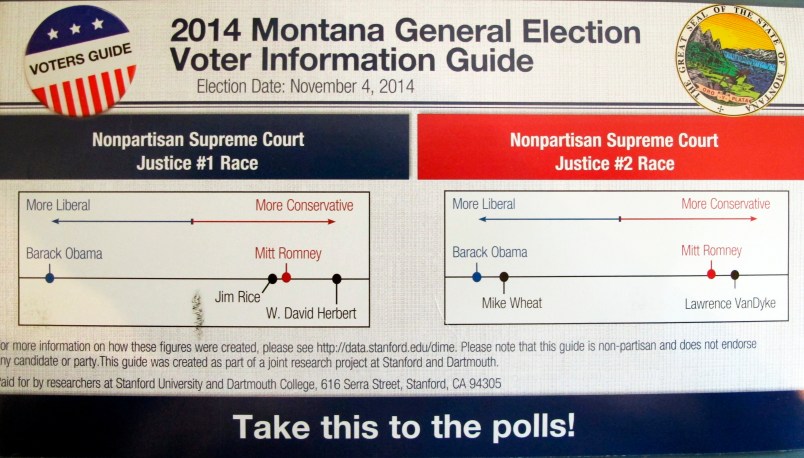

So suppose I’m a candidate running for selectman in Massachusetts (these are non-partisan elections) and Team Bonica estimates that I’m left of Che Guevara while my opponent is in the center. Unlike the Gerber-Green-Larimer turnout experiment, there really isn’t neutrality to this information. Especially given the way that people read graphs, the probability that the information has zero tilt for the treatment group is knife-edge. The treatment directly affects those outside of the subject pool. (Of course there is always a plausible indirect effect with social contagion or what we later learn from the experiment, but the key here is direct effects.)

And of course there’s a positive externality here. Even with perfect or cluster-based randomization, people inside the sample can take the treatment information and run outside the sample with it, e.g. to the news, and that news coverage can then have an independent effect on the outcome.

In other words, if I’m in a non-partisan election and someone decides to place me and my opponent on an ideological scale along with major national (partisan) politicians and mass-mail the evidence all over the state, you can say that the election has become a lot less non-partisan.

Now we could respond that this is simply revealing information, and that campaigns do this all the time. But there are two countervailing arguments that suggest that it’s more than this. First, given an election that may not otherwise have lots of internal and external spending, it’s far less clear that this intervention is innocuous. At some level, this is the point that Sen. Tester is making about interfering with non-partisan elections. We could respond that voters deserve information about candidates that may reflect on their partisan leanings, but for better or worse, Montana has designed a system in which they wish elections to have as little partisan influence as possible. The crucial feature of this system is that the candidates do not run with party labels attached to their names, either in the campaign or on the ballot.

John Patty, a former colleague at Harvard whose creative mind I very much admire, has argued that non-partisan elections are not really so non-partisan. If he means that non-partisan elections do not have zero partisanship, he’s trivially right. You can, for instance, be a registered Republican and run for one of these offices. But these elections differ dramatically from elections for the state legislature, and once you look at the longer history of non-partisan elections, it’s clear that Progressives and Populists who put these institutions in place meant to minimize partisan influence in the selection of particular judicial and administrative officials.

So in a state whose institutions intend to minimize partisanship in one set of elections (judicial) and not others (the state legislature), it’s not clear that political scientists should feel entitled to engage in mass provision of party-tinged information in one (judicial) case just because campaigns do it in a whole bunch of other (largely legislative or executive) cases.

And second, the risk is to people outside of the subject pool. Suppose the following. I want to know whether the reputation of business owners affects the sale of the products of the companies they own. There are two major pizza shops in a town. I gather (publicly available) information on (a) the criminal histories of their owners and those owners’ family members and/or (b) what people said about them online. Then I randomly distribute summaries of this information to the residents of the town in which they have their market.

Whether this is ethical is a question that would have to be considered. But in thinking about whether it was ethical (i.e., if I’m on the IRB), I would want to consider the effect not only on the subjects (pizza consumers) but also on people identified in the treatment mailings (pizza shop owners). This alone makes the experiment quite different from intervening in the lives of participants in a study of poverty and violence of the sort that Blattman cites. In those cases and in the Gerber-Green-Larimer treatment, for instance, publicly recognizable figures are not part of the treatment, and/or the treatment does not make those figures more or less publicly recognizable.

I’ll conclude that I don’t think it helps anybody to pursue a witch hunt here, and there is a risk of politicians, social scientists and others piling on a group of young scholars. I’m writing warily on that score, but I’m writing nonetheless because some of the recent commentary (published as well as what I’ve heard around the water cooler) suggests that the only thing that plausibly went wrong here is the mis-appropriation of the state seal. If that is all that political scientists learn from this episode, I think we’ve fooled ourselves.